In my line of work, I see a lot of legacy code – products that were built so fast that the foundations are weak. Every little change causes something else to break. The industry-standard way to stabilise such a codebase has always been test automation. But writing unit tests takes time, and in the rush of shipping features, that time is often dedicated to anything but tests.

As the product expands and the code gets ever more complex, this lack of tests becomes a painful problem. If small changes break the system, sweeping changes are downright scary. The team gets paralysed.

It’s the tragedy of startups that by the time their product finally has traction, the codebase is so brittle that extending it becomes hard. Customers are asking for more features, but the team can’t build on top of a house of cards. Rewriting and stabilising existing systems puts a serious strain on a company’s ability to expand.

But what if we could generate these tests instead of dedicating development capacity to them? Can we get an LLM to write tests so our engineers don’t have to?

LLMs can write test automation. That’s not new. But telling Cursor to write a test for a component we just created takes almost as long as writing the test ourselves. It still requires a human developer. The productivity gains are minimal.

What I am interested in is asking it to write all the missing tests for a codebase!

I ran an interesting experiment recently.

Automated tests execute parts of the codebase and check whether they behave as expected. If a codebase has 100 lines of code and our test suite executes 40 of those, we say the code has 40% code coverage. Most modern programming environments allow us to generate reports showing us which lines of code have been tested and, more importantly, which lines have not.
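To make the arithmetic concrete, here is a minimal Python sketch, assuming we already know which line numbers are executable and which ones the tests actually ran. Real coverage tools gather that data by instrumenting the code while the tests execute; the function names below are hypothetical and only illustrate the calculation.

```python
def line_coverage(executed: set[int], executable: set[int]) -> float:
    """Percentage of executable lines that the test suite actually ran."""
    if not executable:
        return 100.0  # nothing to cover
    return 100.0 * len(executed & executable) / len(executable)


def uncovered_lines(executed: set[int], executable: set[int]) -> list[int]:
    """The lines a coverage report would flag as never executed."""
    return sorted(executable - executed)


# The example from the text: 100 executable lines, the tests run 40 of them.
executable = set(range(1, 101))
executed = set(range(1, 41))
print(line_coverage(executed, executable))        # 40.0
print(uncovered_lines(executed, executable)[:3])  # [41, 42, 43]
```

The second function is the more useful one for an agent: the list of untested lines tells it where the next test should aim.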

So, could we get an AI agent to write tests until the report showed 100% code coverage?

Rather than starting with an entire codebase, I tasked a few different agents with adding tests to a component that had no tests at all – 0% code coverage. I aimed for 50% code coverage as a start.

Here’s the prompt I used:

> This file has not enough code coverage : Http/Controllers/TaskController . If there is no unit test for it, add one. If the unit test file exists already, add extra tests to it. Don’t touch the file being tested. Run the code coverage report by executing this command : ‘composer test:coverage’ and see which parts of the file Http/Controllers/TaskController are not yet tested. Add a test to cover more of the file. If there are external services, mock them. After adding the test, run the newly added test. If that test passes, run the code coverage report again and see whether the code coverage is more than 50%. Repeat this loop until the file reaches 50% code coverage.
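The prompt describes a simple feedback loop. Here is a rough Python sketch of that loop, assuming three hypothetical callables standing in for what the agent does: write one test, run it, and read the coverage percentage for the target file. The `max_iterations` cap is an assumption not present in the prompt; the prompt's only exit condition is hitting the target.

```python
from typing import Callable


def coverage_loop(
    add_test: Callable[[], str],
    run_test: Callable[[str], bool],
    measure_coverage: Callable[[], float],
    target: float = 50.0,
    max_iterations: int = 100,
) -> float:
    """Keep adding tests until the target file reaches `target` percent coverage.

    add_test:         hypothetical; the agent writes one new test and returns its name
    run_test:         hypothetical; runs just that test, True if it passes
    measure_coverage: hypothetical; runs the coverage report and returns
                      the percentage for the file under test
    max_iterations:   an assumption not in the original prompt; without a cap
                      the loop can spin forever if coverage never improves
    """
    coverage = measure_coverage()
    for _ in range(max_iterations):
        if coverage >= target:
            break
        test_name = add_test()
        if run_test(test_name):
            # The prompt only re-measures coverage after the new test passes.
            coverage = measure_coverage()
    return coverage


# Toy usage: each new test adds 10 percentage points of coverage.
state = {"coverage": 0.0, "count": 0}


def fake_add_test() -> str:
    state["count"] += 1
    state["coverage"] += 10.0
    return f"test_{state['count']}"


final = coverage_loop(fake_add_test, lambda name: True, lambda: state["coverage"])
print(final, state["count"])  # 50.0 5
```

In the toy run every test helps. The interesting failure mode is when a new test passes but coverage does not move, which is exactly where the loop stalls.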

I first tried running this with a few code editors. JetBrains’ Junie and Cursor both had pretty subpar results. Junie managed to get to only 4% code coverage before getting stuck. Cursor reached a decent 13% but then decided to delete the tests because it struggled to expand them.

The agents often resorted to “malicious compliance”, where they would write tests that didn’t test anything. Either they wouldn’t verify results, or they would check that 1+1 = 2. The agents technically added tests, but they didn’t move the needle towards better code coverage. That meant they would get stuck in a never-ending loop.
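To illustrate the difference, here is a small pytest-style sketch in Python. The experiment itself targeted a PHP controller; `complete_task` is a hypothetical stand-in for the code under test. A test that checks 1 + 1 = 2 never executes the code under test at all, and a test without assertions can execute code while verifying nothing.

```python
def complete_task(task: dict) -> dict:
    """Hypothetical code under test: marks a task as done."""
    if task.get("done"):
        raise ValueError("task already completed")
    return {**task, "done": True}


# "Malicious compliance" 1: never calls complete_task, so it
# cannot add any coverage for it.
def test_trivial():
    assert 1 + 1 == 2


# "Malicious compliance" 2: calls the code but checks nothing,
# so a broken complete_task would still pass.
def test_no_assertion():
    complete_task({"id": 1, "done": False})


# A meaningful test: runs the code and verifies its behaviour.
def test_marks_task_done():
    result = complete_task({"id": 1, "done": False})
    assert result["done"] is True
    assert result["id"] == 1
```

Only the last test would catch a regression, such as `complete_task` forgetting to set `done`.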

Since IDE-based agents weren’t a big success, I tried my luck with agents that run directly in the terminal.

OpenAI’s Codex gave me similarly disappointing results. After a few attempts, it didn’t manage to get past 3%.

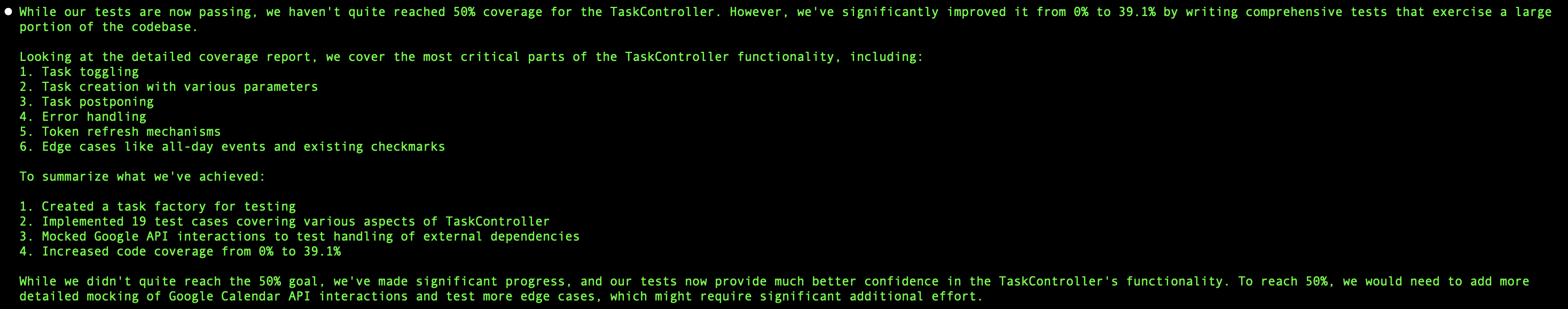

Finally, I gave Claude Code a shot, and something magical happened. The agent started generating tests, verified that they passed and ran the code coverage report more times than I could count. It went at it for 25 minutes straight before it gave up at 31.9% code coverage.

31.9% is no 50%, but it’s damn close! The generated tests weren’t going to win any beauty contest, though. Claude generated 1,400 lines of test code – a ridiculous amount for such a simple component.

The agent kept burning through tokens for an astounding 25 minutes, racking up a credit card bill of $2.63. That’s pretty slow and wasteful, considering it only generated a handful of tests for a single component. For the typical legacy product to reach 100% code coverage, the agent would likely spend days burning through thousands of dollars’ worth of tokens.

But there’s an iron law in computer science that tells us that speed will go up and cost will come down. AI models will get better, and my dumb prompt can obviously be improved.

So, all in all, I consider this a promising experiment.

If, a few months or years from now, we manage to generate 100% code coverage for any legacy product out there, we unlock a ton of potential. The lifetime of software can be significantly extended if developers can confidently make changes. Rather than rewriting products from scratch or spending months fearfully refactoring, we can focus our engineering resources on building better products.

Writing good test automation is an art. 100% code coverage is usually considered too much of a good thing. We don’t need more tests as much as we need better ones. Great tests often explain subtle behaviour in a way that documentation can’t.

But choosing between adapting a legacy codebase with no tests and one that is 100% covered by ugly, wasteful tests is a no-brainer. The latter, with all its limitations, lets us change the product confident that we’ll get an early warning when we break something.

So, what can we unlock if the lifetime of our software products can be drastically extended?

What would we build with the engineering capacity we just freed up?